Inclusive GAN:

Improving Data and Minority Coverage in Generative Models

ECCV 2020

1. University of Maryland

2. Max Planck Institute for Informatics

3. University of California, Berkeley

4. CISPA Helmholtz Center for Information Security

5. Institute for Advanced Study

6. Google

Abstract

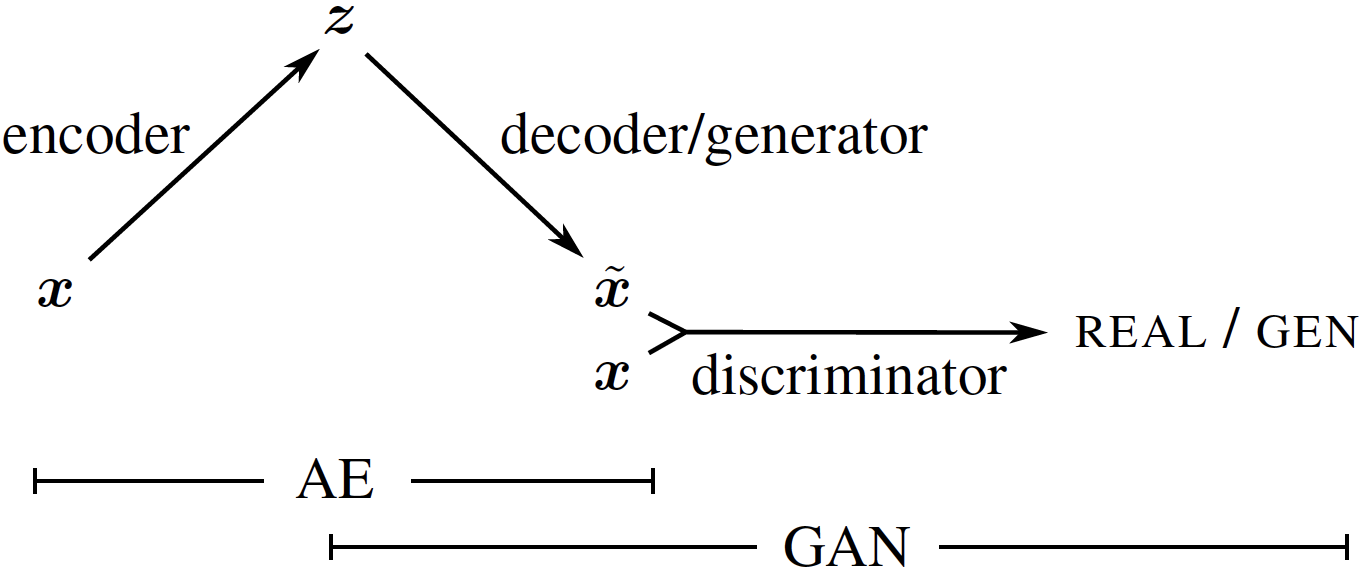

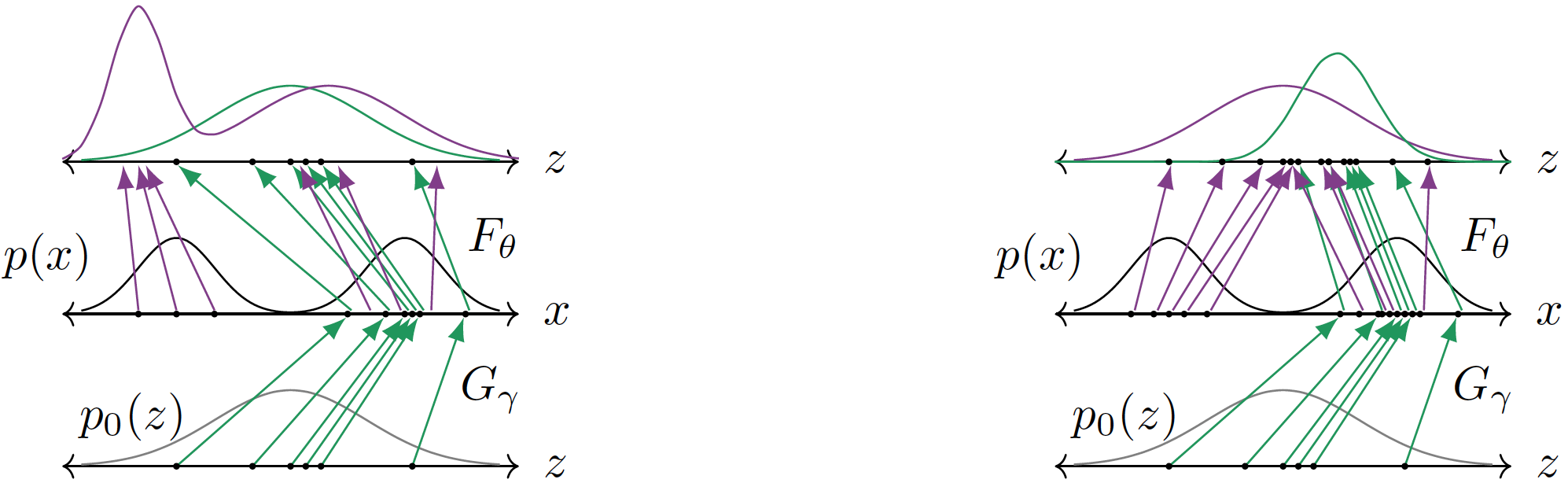

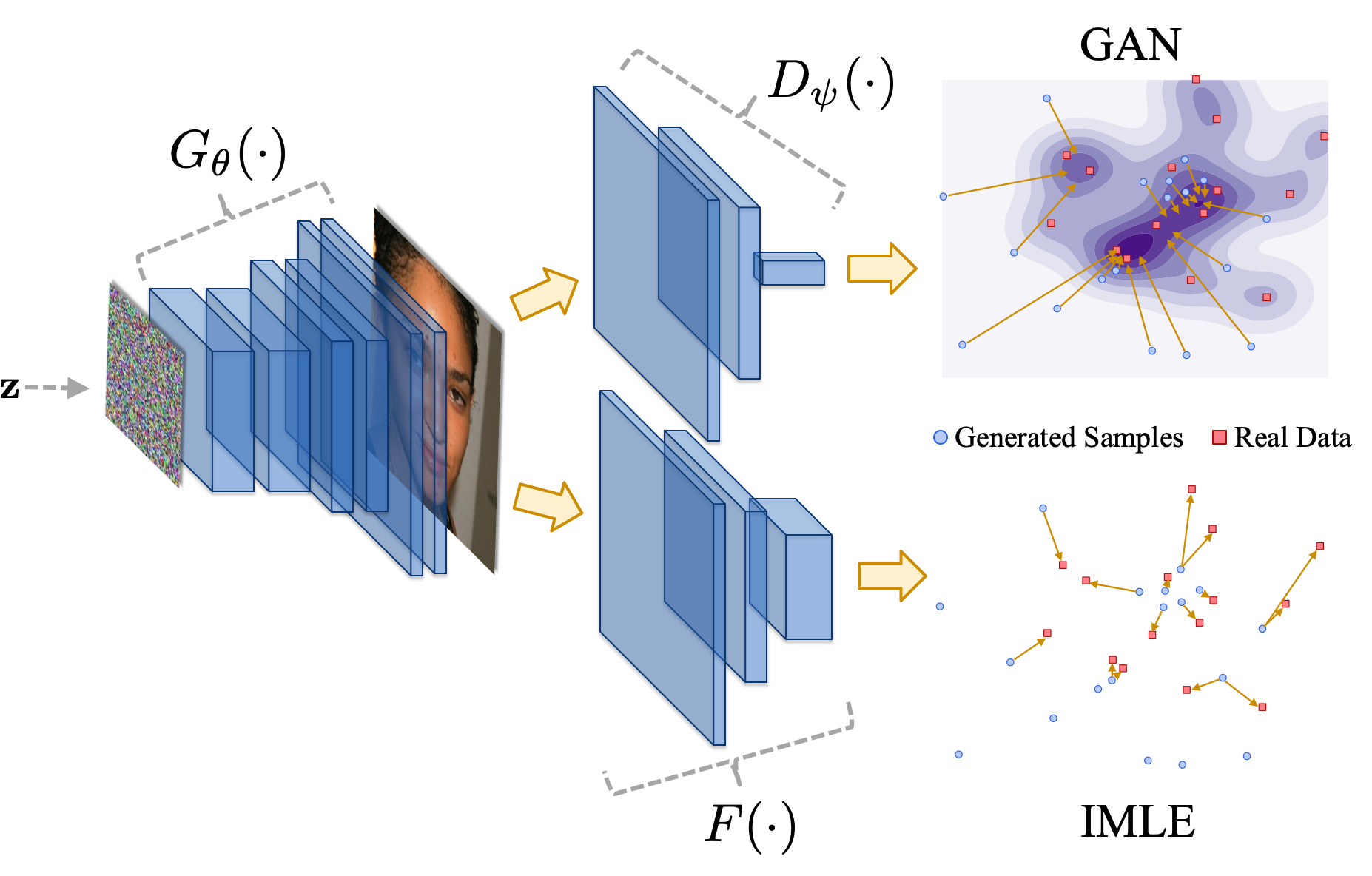

Generative Adversarial Networks (GANs) have brought about rapid progress towards generating photorealistic images. Yet the equitable allocation of their modeling capacity among subgroups has received less attention, which could lead to biases against underrepresented minorities if left uncontrolled. In this work, we first formalize the problem of minority inclusion as one of data coverage, and then propose to improve data coverage by harmonizing adversarial training with reconstructive generation. The experiments show that our method outperforms existing state-of-the-art methods in terms of data coverage on both seen and unseen data. We also develop an extension that allows explicit control over the minority subgroups that the model must include, and validate its effectiveness with little compromise to overall performance on the entire dataset.
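To make the idea of data coverage concrete, the toy sketch below scores how well a set of generated samples covers a set of real samples, separately for a majority and a minority subgroup. This page does not define the coverage measure, so the sketch assumes a simple stand-in: the mean distance from each real point to its nearest generated point. The function names, the 2-D data, and the two "generators" are all hypothetical and are not taken from the paper.

```python
import math

def nearest_distance(point, samples):
    """Euclidean distance from `point` to its closest element of `samples`."""
    return min(math.dist(point, s) for s in samples)

def coverage_gap(real, generated):
    """Mean nearest-sample distance from each real point to the generated set.

    Assumed stand-in for a coverage measure: lower means the generated
    samples cover the real data better.
    """
    return sum(nearest_distance(x, generated) for x in real) / len(real)

# Toy 2-D data: hypothetical majority and minority subgroups.
majority = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0)]
minority = [(5.0, 5.0)]

# Outputs of two hypothetical generators.
collapsed = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0)]              # ignores the minority mode
inclusive = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (5.0, 5.0)]  # also covers the minority

for name, gen in [("collapsed", collapsed), ("inclusive", inclusive)]:
    print(name, coverage_gap(majority, gen), coverage_gap(minority, gen))
```

In this toy setting both generators cover the majority equally well, and only the per-subgroup score exposes that the first one misses the minority.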

Demos

Optimization for image reconstruction

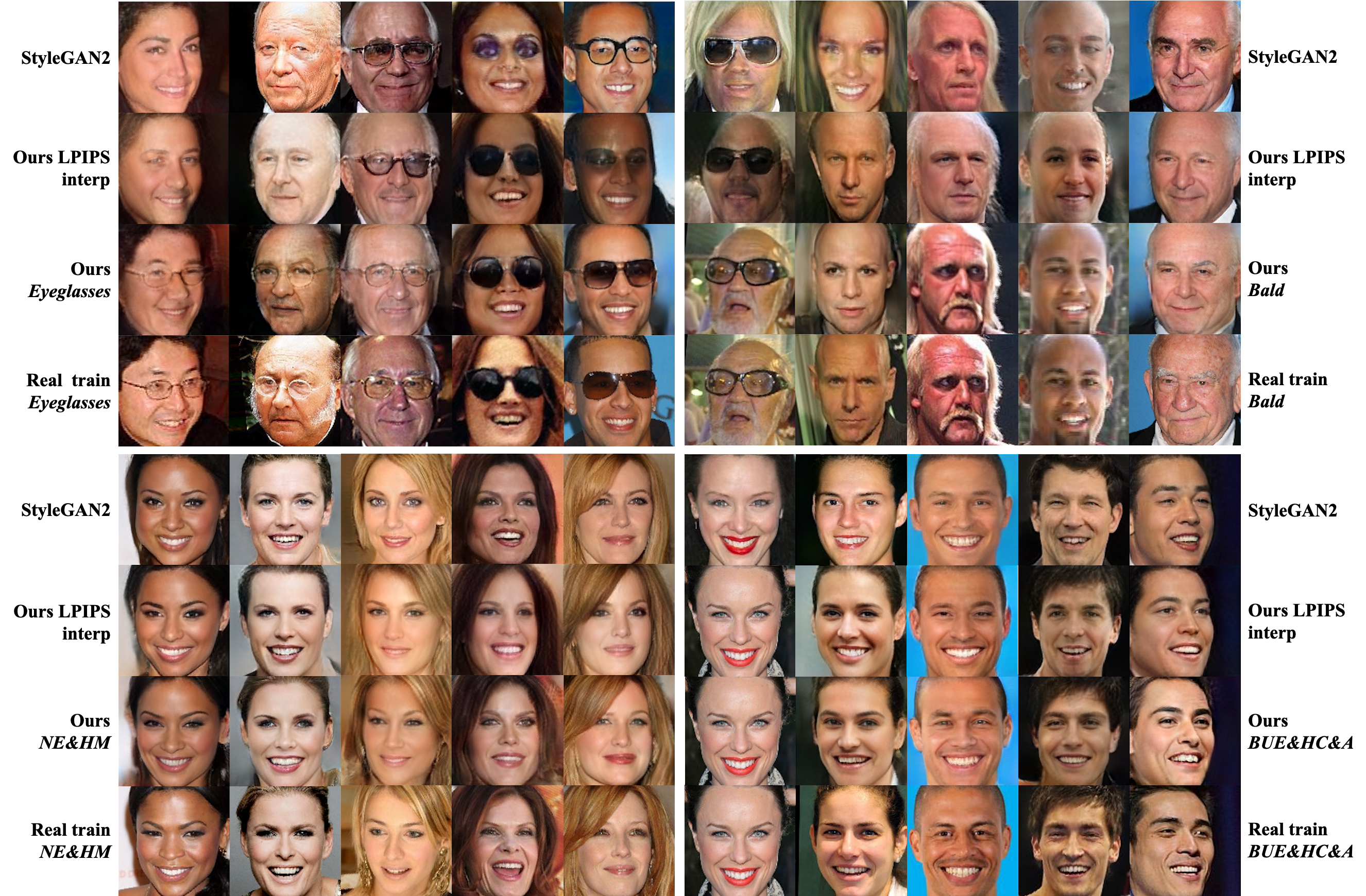

Minority reconstruction

Interpolation from majority to minority

Eyeglasses

Majority real, StyleGAN2, Ours general, Ours minority, Minority real

Bald

Majority real, StyleGAN2, Ours general, Ours minority, Minority real

Narrow_Eyes&Heavy_Makeup

Majority real, StyleGAN2, Ours general, Ours minority, Minority real

Bags_Under_Eyes&High_Cheekbones&Attractive

Majority real, StyleGAN2, Ours general, Ours minority, Minority real

Video

Press coverage

thejiangmen Academia News

Citation

@inproceedings{yu2020inclusive,
  author={Yu, Ning and Li, Ke and Zhou, Peng and Malik, Jitendra and Davis, Larry and Fritz, Mario},
  title={Inclusive GAN: Improving Data and Minority Coverage in Generative Models},
  booktitle={European Conference on Computer Vision (ECCV)},
  year={2020},
}

Acknowledgement

We thank Richard Zhang and Dingfan Chen for constructive advice. This project was partially funded by the DARPA MediFor program under cooperative agreement FA87501620191 and by ONR MURI N00014-14-1-0671. Any opinions, findings, conclusions, or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of DARPA or ONR MURI.